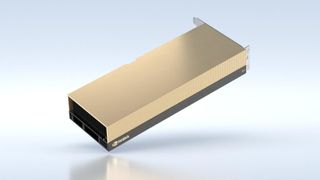

These new Nvidia mini GPUs will help bring AI to the datacenter

New Nvidia GPUs plug into the PCIe slot for instantaneous performance boost

In a bid to lower the barrier to entry for high-performance computing, Nvidia has announced two new GPUs that plug into existing enterprise servers and workstations.

The A10 and A30 GPUs are both based on the Ampere architecture and occupy one or two full-length PCIe slots, respectively.

Speaking to The Register, Manuvir Das, Nvidia's head of enterprise computing, explained that the cards are part of Nvidia's effort to let users tackle artificial intelligence (AI) workloads on typical datacenter hardware and racks.


Affordable compute

Das also gave assurances that Nvidia's Kubernetes-based EGX platform, which runs machine learning at the edge of the network, will work with the new GPUs.

Nvidia has reportedly partnered with many of the major server makers, including Dell, Lenovo, H3C, Inspur, QCT and Supermicro, to package the A10 and A30 in their enterprise and datacenter offerings.

The new GPUs cost about $2,000 and $3,000, respectively. The A10 is rated at a 150W TDP, carries 24GB of GDDR6 memory and delivers 31.2 single-precision (FP32) teraFLOPS. The A30, by contrast, is rated at 165W, carries 24GB of second-generation high-bandwidth memory (HBM2) and delivers 10.3 single-precision teraFLOPS.
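To put those numbers side by side, the short sketch below works out peak single-precision throughput per watt and per dollar for each card. It uses only the approximate prices and headline FP32 figures quoted above; the `cards` dictionary and `efficiency` helper are illustrative names, not anything from Nvidia. It ignores other precisions, memory bandwidth and real-world workloads, so it is a rough comparison of spec-sheet figures rather than a performance benchmark.

```python
# Spec-sheet figures as quoted in the article; prices are approximate.
cards = {
    "A10": {"price_usd": 2000, "tdp_w": 150, "fp32_tflops": 31.2},
    "A30": {"price_usd": 3000, "tdp_w": 165, "fp32_tflops": 10.3},
}


def efficiency(spec):
    """Return (peak FP32 GFLOPS per watt, peak FP32 GFLOPS per dollar)."""
    gflops = spec["fp32_tflops"] * 1000  # convert teraFLOPS to gigaFLOPS
    return gflops / spec["tdp_w"], gflops / spec["price_usd"]


for name, spec in cards.items():
    per_watt, per_dollar = efficiency(spec)
    print(f"{name}: {per_watt:.1f} GFLOPS/W, {per_dollar:.2f} GFLOPS/$")
```

On these FP32 figures alone the A10 comes out well ahead on both measures, which is one reason not to read single-precision throughput as the whole story for the pricier A30.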

While the A10 will go on sale later this month, the A30 is expected to launch sometime later this year.


Via: The Register

With almost two decades of writing and reporting on Linux, Mayank Sharma would like everyone to think he’s TechRadar Pro’s expert on the topic. Of course, he’s just as interested in other computing topics, particularly cybersecurity, cloud, containers, and coding.
