How we test TVs at TechRadar

Putting the best TVs to the test

When we review the best TVs at TechRadar, each TV is put through its paces in a series of tests that combine the subjective opinions of the reviewer with objective measurements made using specialized testing equipment.

During our subjective tests, we do casual viewing using broadcast, streaming, and Blu-ray (HD and 4K) sources with the set’s most accurate picture mode active, both to run in the TV and to make a general assessment of picture quality. We then take a more critical approach to viewing by analyzing contrast, black levels, color, screen uniformity, and more. We also assess the quality of the TV's built-in audio, cosmetic design, smart TV platform, gaming features, and value for money.

Below, you’ll find a breakdown of each objective test, along with more detailed descriptions of our subjective testing process and how we test the best gaming TVs.

How we test TV brightness

We measure brightness on a TV for two reasons: to evaluate how well it can overcome reflections from light sources in the same viewing area, and to see how well it can reproduce highlights in HDR sources.

To do this, we measure a range of 100 IRE (full white) test patterns in different window sizes. The two most important are the 10% window (10% of the screen’s total area), which indicates the TV’s HDR peak brightness capability, and the 100% window (full-screen brightness), which tells us how well the TV can handle reflections and sustain brightness across the whole screen when viewing programs such as sports. Brightness is measured in ‘nits’ (also known as cd/m², or candelas per square meter), and our measurements are included in our reviews.

Our peak and full-screen brightness measurements are made using a test pattern generator to create consistent light patterns, and a colorimeter to measure them. This happens in a dark environment to prevent any light sources from affecting the measured result.
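To make the window sizes concrete, here is a minimal Python sketch of how large a test window covering a given share of the screen would be on a 4K panel. It assumes the window keeps the screen's aspect ratio, which may not match how every pattern generator draws its windows. The function name and the brightness readings are hypothetical and for illustration only.

```python
import math

def window_size(width_px, height_px, area_fraction):
    """Return (width, height) in pixels of a window covering `area_fraction`
    of the screen, assuming it keeps the screen's aspect ratio."""
    if not 0 < area_fraction <= 1:
        raise ValueError("area_fraction must be in (0, 1]")
    # Area scales with the square of the side length, so each side
    # shrinks by the square root of the area fraction.
    scale = math.sqrt(area_fraction)
    return round(width_px * scale), round(height_px * scale)

# Hypothetical readings in nits (cd/m²), keyed by window size as a
# fraction of screen area. These numbers are made up for illustration.
readings = {0.10: 1450.0, 1.00: 620.0}

for fraction, nits in readings.items():
    w, h = window_size(3840, 2160, fraction)
    print(f"{fraction:.0%} window ({w}x{h} px): {nits:.0f} nits")
```

Under that assumption, a 10% window on a 3840 x 2160 screen is roughly 1214 x 683 pixels, noticeably larger than "10%" might suggest at first glance.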

Read more about how we test TV brightness

How we test TV color gamut

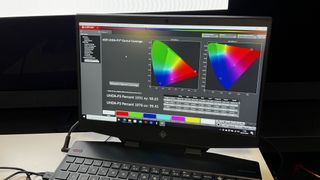

A TV’s color gamut coverage describes how much of the range of colors used in HDR video it can display. It’s expressed as a percentage, so 100% coverage means the TV can show every color that HDR videos can include. When measuring a TV’s color gamut coverage, we record two results: BT.2020, a standard that has helped inform HDR TV and video development, and UHDA-P3, which is used for mastering 4K Blu-ray and digital cinema releases. We look for a UHDA-P3 result of 95% or above, as that indicates excellent color gamut coverage.

To record these results, we use the same methodology as for brightness, except the test pattern generator here displays a sequence of colors that the colorimeter will measure to indicate the TV’s gamut coverage. Results are shown in Portrait Displays’ Calman software.
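As a rough illustration of what a coverage percentage means, the Python sketch below computes the share of a target gamut triangle that a measured gamut triangle overlaps, using CIE 1931 xy chromaticity coordinates. This is one common way to express coverage and is not necessarily how Calman calculates it (some tools work in CIE 1976 u'v' instead). The target primaries are the published BT.2020 and DCI-P3 values; treating the latter as the UHDA-P3 target is an assumption, and the measured primaries are hypothetical.

```python
def signed_area(poly):
    """Shoelace formula; positive when vertices run counter-clockwise."""
    return 0.5 * sum(
        x1 * y2 - x2 * y1
        for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1])
    )

def counter_clockwise(poly):
    poly = list(poly)
    return poly if signed_area(poly) > 0 else poly[::-1]

def side(a, b, p):
    """>= 0 when p lies on or to the left of the directed edge a -> b."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def clip(subject, clipper):
    """Sutherland-Hodgman clipping of `subject` by a convex `clipper`."""
    output = counter_clockwise(subject)
    clipper = counter_clockwise(clipper)
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        points, output = output, []
        if not points:
            break
        prev = points[-1]
        for cur in points:
            s_prev, s_cur = side(a, b, prev), side(a, b, cur)
            if (s_prev >= 0) != (s_cur >= 0):  # segment crosses this edge
                t = s_prev / (s_prev - s_cur)
                output.append((prev[0] + t * (cur[0] - prev[0]),
                               prev[1] + t * (cur[1] - prev[1])))
            if s_cur >= 0:
                output.append(cur)
            prev = cur
    return output

def coverage_percent(measured, target):
    """Percentage of the target gamut's area that the measured gamut overlaps."""
    overlap = clip(measured, target)
    if len(overlap) < 3:
        return 0.0
    return 100.0 * abs(signed_area(overlap)) / abs(signed_area(target))

# Published red, green, blue primaries in CIE 1931 xy.
DCI_P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]
BT2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]

# Hypothetical measured primaries, made up for illustration.
measured = [(0.675, 0.322), (0.270, 0.680), (0.152, 0.062)]

print(f"P3 coverage:      {coverage_percent(measured, DCI_P3):.1f}%")
print(f"BT.2020 coverage: {coverage_percent(measured, BT2020):.1f}%")
```

Because only the overlapping area counts, a TV whose primaries fall outside the target in one direction gets no extra credit for it; coverage can never exceed 100%.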

Read more about how we test TV color gamut coverage

How we test TV accuracy

When testing a TV’s accuracy, we focus on two areas: grayscale and color. Grayscale refers to shades of gray between black and white, and measuring these can determine a TV’s contrast ratio and how accurately it displays shades of light and dark. Color accuracy describes how accurately a TV shows different hues on screen, meaning whether the colors are true to the source.

To determine grayscale and color accuracy, we record Delta-E values in Calman, which indicate the level of difference between the ‘perfect’ test pattern source and what is shown on-screen. Again, this is done using our test pattern generator and our colorimeter. We express the results as a number, where lower is better. We consider a result below 3 to be excellent.
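For a sense of what a Delta-E number represents, here is a minimal Python sketch using CIE76, the simplest Delta-E formula: the straight-line distance between the reference color and the measured color in CIELAB space. Calman offers other formulas, such as Delta-E 2000, and which one is used here is not stated, so treat CIE76 as a stand-in. The patch values, and the choice to average across patches, are assumptions for illustration.

```python
import math

def delta_e_76(lab_reference, lab_measured):
    """CIE76 Delta-E: Euclidean distance between two CIELAB colors."""
    return math.dist(lab_reference, lab_measured)

# Hypothetical (reference, measured) L*a*b* pairs, made up for illustration.
patches = [
    ((50.0, 0.0, 0.0), (50.0, 1.2, 1.6)),
    ((75.0, 10.0, -5.0), (74.0, 10.0, -5.0)),
]

errors = [delta_e_76(ref, meas) for ref, meas in patches]
average = sum(errors) / len(errors)

print(f"average Delta-E: {average:.2f}")
print("excellent" if average < 3 else "above the 'excellent' threshold")
```

A Delta-E of zero would mean the measured color matches the reference exactly, and the number grows as the two drift apart.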

Read more about how we test TV accuracy

How we test TV input lag

Input lag refers to the time elapsed between a source sending a signal to a display and the display showing it on screen. For gaming, particularly professional or competitive gaming, a low input lag time is crucial. Modern TVs apply a lot of digital processing, which can increase input lag, so many sets provide a built-in ‘game mode’ to reduce it for optimal gaming performance.

To test input lag on TVs, we put the TV into its game mode equivalent and then use a Leo Bodnar 4K HDMI Video Signal Lag Tester to generate a test pattern. We record the results by holding its sensor up to the middle bar of a three-bar pattern that appears on screen (the middle bar is the equivalent of taking an average of the other bars). The Leo Bodnar box sends out a signal and then measures the time it takes to detect that signal itself, displaying the time taken on the screen. This is measured in milliseconds. After the results stabilize and several readings are taken, we record the result.
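The idea of waiting for readings to stabilize before recording a result can be sketched in Python as follows. The exact stability criterion and the way multiple readings are combined are not specified, so the window size, tolerance, and use of the median here are all hypothetical choices, as are the sample readings.

```python
from statistics import median

def stable_reading(readings_ms, window=3, tolerance_ms=0.5):
    """Return the median of the last `window` readings if they agree to
    within `tolerance_ms`; otherwise return None (not yet stable)."""
    recent = readings_ms[-window:]
    if len(recent) < window:
        return None  # not enough readings taken yet
    if max(recent) - min(recent) > tolerance_ms:
        return None  # readings are still fluctuating
    return median(recent)

# Hypothetical meter readings in milliseconds, made up for illustration.
readings = [14.2, 9.9, 9.8, 9.8, 9.7]
print(stable_reading(readings))
```

Using the median rather than the mean keeps a single stray reading from skewing the recorded figure.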

Read more about how we test TV input lag

How our subjective testing works

As mentioned above, we first ‘run in’ each TV by running video on it for 12 hours, because the nature of a TV’s images can change when it’s first used. During this period, we will cycle through the TV’s preset picture modes – often named Standard, Movie, Vivid, and so on – and select one that looks best for most sources across the board. (We later measure these modes to determine which is most accurate, and that mode is used for final tests.)

Next, we take a more critical approach to viewing, looking at colors and how vibrant and realistic they are. For contrast and black levels, we look to see if the TV can provide a true representation of darker tones (blacks that actually look like black and not an elevated gray, or gray tones that still have nuance and don’t lose fine detail into blackness). With LCD models, we judge black uniformity (the TV’s ability to display black evenly across the screen), checking for any backlight blooming (the ‘halo effect’) or cloudiness. We also evaluate picture uniformity across a range of viewing positions, looking for contrast and color fade at off-center seats.

Detail is another important aspect of picture quality. We look at how true-to-life pictures are and check that details aren’t over-enhanced. We also test motion processing, noting any judder, blur, or stuttering, and not just with faster-paced sources such as sports but also with 24fps movies.

We often use the same reference scenes on 4K Blu-ray and streaming services such as Netflix, Prime Video, and Disney Plus for testing. That’s because using the same scenes provides us with a reference for comparison and can quickly demonstrate the strengths and weaknesses of a particular TV.

We also test a TV’s upscaling ability, using lower-resolution sources. Again, we’re looking for how natural the detail appears once it’s been processed and upscaled, or how natural colors appear if they’ve been upscaled from SDR to HDR.

But it’s not just picture quality that we test. We also evaluate a TV’s built-in audio since a TV’s internal speakers are important for those who don’t want to buy one of the best soundbars. We check mainly for power, clarity of speech, and the effectiveness of any virtual surround sound processing, including Dolby Atmos effects if the TV is compatible with that format.

We also consider design, from overall build quality and the included stand to the depth and profile of its bezel. We test the smart TV platform and menus, looking at ease of use, responsiveness, range of features, and how easily settings can be adjusted to tailor the TV to the user’s preferences.

How we test a TV’s gaming features and performance

We look at several features when testing a TV’s gaming performance. Many of the best gaming TVs come with HDMI 2.1 ports, which provide eARC compatibility for soundbars and features designed for next-gen video game consoles like the Xbox Series X and PS5.

Those features include higher refresh rates of 120Hz and 144Hz (the latter for PC gaming), which provide a more responsive gaming experience. There is also VRR (variable refresh rate), a feature designed to reduce screen tearing and judder while gaming (although it’s worth noting that VRR does not require HDMI 2.1 to work). In some cases, a TV will support both AMD FreeSync and Nvidia G-Sync, which are different types of VRR. Finally, there is ALLM (auto low latency mode), which automatically switches the TV to its low-latency mode when it detects a connected game console.

We make sure during testing to place a TV in its optimum ‘Game Mode’, as this will activate the best settings for gaming. We then play using an Xbox Series X or PS5 console to ensure the gaming features work in practice, while also looking at visual and audio performance from games, smoothness, and response times.

Al Griffin has been writing about and reviewing A/V tech since the days LaserDiscs roamed the earth, and was previously the editor of Sound & Vision magazine.

When not reviewing the latest and greatest gear or watching movies at home, he can usually be found out and about on a bike.

- James Davidson, TV Hardware Staff Writer, Home Entertainment
