Inside the Linux kernel 3.0

Linux has gone from 10,000 lines of code to 15 million

The new Linux 3.0 kernel in all its shiny awesomeness will be finding its way into your favourite distro any day now. So what does this major milestone release contain to justify the jump in version number?

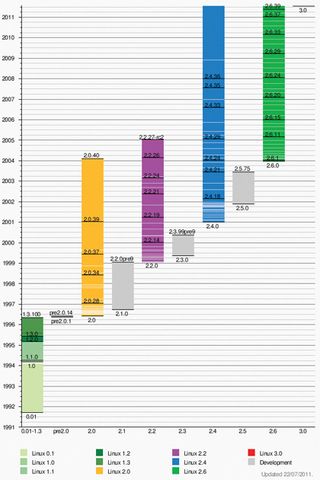

The short answer is nothing really - this is just 2.6.40 renamed. Linus Torvalds felt the numbers were getting too high, that the 2.6.* notation was getting out of hand, and that Linux had now entered its third decade, so a new number was called for.

Torvalds said: "We are very much not doing a KDE 4 or a Gnome 3 here, no breakage, no special scary new features, nothing at all like that. We've been doing time-based releases for many years now, this is in no way about features. If you want an excuse for the renumbering, you really should look at the time-based one (20 years) instead."

The old numbering system used the first digit for the major release - which has been 2 for what seems like forever - and the second for the minor release, with odd numbers marking development versions and even numbers stable releases. While 2.4 was the main kernel in use, for example, 2.5 was the development series that became 2.6. The third digit counted the individual releases within a series. Then a fourth digit was added for backported fixes to the stable releases, and it all got messy, especially as the first two numbers stopped changing (there was never a 2.7 development series).

Now 3 is the major version, the second number is the minor release and patches for stable releases get the third digit when needed. Currently we are on 3.0 - in Torvalds's own words, "gone are the 2.6.<bignum> days".
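To make the difference between the two schemes concrete, here is a minimal sketch that explains a version string the way the paragraphs above describe it. `describe_kernel_version` is a hypothetical helper, not a kernel tool; it assumes plain dotted numbers (no `-rc` suffixes or distro tags) and applies the odd/even rule to every pre-3.0 series, which is a simplification.

```python
def describe_kernel_version(version: str) -> str:
    """Explain a plain dotted kernel version under the old or new scheme."""
    parts = [int(p) for p in version.split(".")]
    if parts[0] >= 3:
        # New scheme: major.release, plus an optional stable-update number
        release = f"{parts[0]}.{parts[1]}"
        if len(parts) > 2:
            return f"{release} stable update {parts[2]}"
        return f"{release} release"
    # Old scheme: an odd second number marked a development series
    kind = "development" if parts[1] % 2 else "stable"
    desc = f"{kind} series {parts[0]}.{parts[1]}"
    if len(parts) > 2:
        desc += f", release {parts[2]}"  # third digit: release within the series
    if len(parts) > 3:
        desc += f", stable patch {parts[3]}"  # fourth digit: stable fixes
    return desc


if __name__ == "__main__":
    for v in ("2.4.0", "2.5.75", "2.6.32.1", "3.0", "3.0.1"):
        print(v, "->", describe_kernel_version(v))
```

Under the old scheme a four-part string such as 2.6.32.1 needs three separate explanations; under the new one, 3.0.1 needs only two.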

The main reason for the escalating numbers, apart from the fact that the 2.6 line is almost eight years old, is a change in release philosophy. Releases used to be based on features; when the new features were stable and tested, a new kernel would be released - the "when it's ready" approach.

For example, 1.0 saw the introduction of networking (it's hard to imagine Linux without networking at its core nowadays), 1.2 saw support for multiple platforms - adding Alpha and m68k to the original i386, while 2.0 added support for SMP - multiple processors.


The last one is an example of how the landscape has changed while Linux has remained relevant: SMP was added for 'big iron' multiprocessor servers, yet now we have dual-core CPUs in phones. The drawback of the old approach was that waiting for features to be complete could lead to long gaps between releases.

Nowadays the kernel undergoes steady evolution and has a new release every eight to ten weeks - more of a "whatever is ready" approach. If a new feature or driver isn't ready in time for the deadline, it's left until the next release.

If nothing major has changed since 2.6.39, what have the kernel developers been doing? With a new release every few months, the changes between adjacent releases are rarely dramatic on their own, but the cumulative effect is significant. So what has changed over the past year or two?

A list of the new hardware supported by the kernel would fill the magazine. With plenty of encouragement and guidance from the likes of Greg Kroah-Hartman, more hardware manufacturers than ever are working with kernel developers to ensure good support.

Drivers and support for hardware are being added almost as fast as the manufacturers can get it on the shelves. From wireless cards to webcams, and even the Microsoft Kinect in Linux 3.0, there is a huge range of hardware supported out of the box nowadays.

It's not just USB or PCI-connected hardware either; the ability to compile a slimmed-down kernel with only the features and drivers you need is what makes Linux ideal for embedded devices. From mobile phones and network routers to car entertainment systems, they all have their own hardware and the kernel supports it.

Fine lines

In November 2010, the internet lit up with discussion and counter-argument when news broke of "the 200 Line Linux Kernel Patch That Does Wonders". Designed to improve desktop responsiveness, this patch separates tasks launched from different terminals or environments into groups and ensures that no single group gets to monopolise the CPU.

In real terms, this means that an intensive task running in the background, such as software compilation (naturally, Linus tested it with a kernel compile) or video transcoding, will not bring your browser to its knees. The days of heavy system load showing up as jerky window movement or stuttering text scrolling are largely behind us.

What makes this so interesting (apart from the fact that a marked change to the desktop was made in a couple of hundred lines of code) is that it requires nothing from the user as long as the kernel has this code enabled, which in practice means any distro-supplied kernel from 2.6.38 onwards. It also makes a difference on any type of hardware, from an Atom-powered netbook to a six-core monster.
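Some back-of-the-envelope arithmetic shows why grouping helps. This is a simplified model, not the scheduler's actual code: it assumes a single CPU, that every runnable task has equal weight, and that each group also gets equal weight. The workload (eight compile jobs in one terminal against a single browser task) is a hypothetical example.

```python
from fractions import Fraction


def per_task_shares(groups: dict[str, int]) -> dict[str, Fraction]:
    """CPU share of each group when every runnable task gets an equal slice."""
    total_tasks = sum(groups.values())
    return {name: Fraction(tasks, total_tasks) for name, tasks in groups.items()}


def per_group_shares(groups: dict[str, int]) -> dict[str, Fraction]:
    """CPU share of each group when the CPU is split evenly between groups first."""
    return {name: Fraction(1, len(groups)) for name in groups}


if __name__ == "__main__":
    # Hypothetical workload: number of runnable tasks in each terminal/session group
    workload = {"kernel compile (make -j8)": 8, "browser": 1}
    for label, shares in (("per task", per_task_shares(workload)),
                          ("per group", per_group_shares(workload))):
        print(label, {name: str(share) for name, share in shares.items()})
```

In this model the browser's share rises from 1/9 of the CPU to 1/2 once tasks are grouped, however many jobs the compile spawns. On kernels built with `CONFIG_SCHED_AUTOGROUP`, the feature can be toggled at runtime through `/proc/sys/kernel/sched_autogroup_enabled`.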

The kernel has certainly grown over the years. One common way to measure the size of a program's code base is SLOC, or source lines of code: simply a count of the lines that have been written. It is hardly surprising that this has grown with each release, although you may be staggered by the overall increase:

- Version 0.01 had 10,239 lines of code
- 1.0.0 had 176,250 lines of code
- 2.2.0 had 1,800,847 lines of code
- 2.4.0 had 3,377,902 lines of code
- 2.6.0 had 5,929,913 lines of code
- 3.0 has 14,647,033 lines of code

Yes, you read that right: Linux has grown from around 10,000 to almost 15 million lines of code. The code base has more than doubled since the first 2.6 kernel was released back in December 2003.
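The growth claims are easy to check from the figures listed above. This short sketch just divides each count by the one before it; the numbers are the article's own, and `growth_factors` is an illustrative helper.

```python
# Source line counts quoted in the article, oldest release first
SLOC = {
    "0.01": 10_239,
    "1.0.0": 176_250,
    "2.2.0": 1_800_847,
    "2.4.0": 3_377_902,
    "2.6.0": 5_929_913,
    "3.0": 14_647_033,
}


def growth_factors(counts: dict[str, int]) -> list[tuple[str, str, float]]:
    """Return (older, newer, factor) for each consecutive pair of releases."""
    versions = list(counts)  # dicts preserve insertion order (Python 3.7+)
    return [(a, b, counts[b] / counts[a]) for a, b in zip(versions, versions[1:])]


if __name__ == "__main__":
    for older, newer, factor in growth_factors(SLOC):
        print(f"{older} -> {newer}: {factor:.2f}x")
    print(f"0.01 -> 3.0 overall: {SLOC['3.0'] / SLOC['0.01']:,.0f}x")
```

The 2.6.0 to 3.0 step works out at about 2.47 times, and the growth since 0.01 is roughly 1,430-fold.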

These line counts are the sum total of all files in the source tarball, including the documentation. Considering that most programmers find writing documentation far more of a chore than writing code, this seems a reasonable measurement.
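The article doesn't say which tool produced its counts, so the following is only an assumption about the method: a sketch that counts every line of every regular file under an unpacked source tree, documentation included, without separating code from comments or blank lines. Its results won't necessarily match the figures quoted above.

```python
import os
import sys


def count_lines(root: str) -> int:
    """Count lines in every regular file under root, documentation included.

    Lines are counted as newline bytes, so a final line without a trailing
    newline is not counted. Blank lines and comments count just like code.
    """
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            # Skip symlinks and anything that isn't a regular file
            if os.path.islink(path) or not os.path.isfile(path):
                continue
            # Binary mode avoids decoding errors on non-UTF-8 files
            with open(path, "rb") as f:
                for chunk in iter(lambda: f.read(65536), b""):
                    total += chunk.count(b"\n")
    return total


if __name__ == "__main__":
    # Usage (hypothetical script name): python count_lines.py linux-3.0
    print(f"{count_lines(sys.argv[1]):,} lines")
```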

Before anyone starts crying "bloat!", remember that although Linux is a monolithic kernel, it is a modular one: drivers for most hardware are provided as loadable modules, and most of them don't even need to be compiled for any particular system, let alone installed or loaded.

Much of the growth in the size of the kernel source has come from the increasingly comprehensive hardware support. One of the misconceptions about open source software, fostered by its detractors, is that because it is free to use, it is somehow amateur and of lower quality. An analysis of the code contributed during 2009, which involved some 2.8 million lines and 55,000 major changes, showed that three quarters of the contributions came from developers employed to work on Linux.

It was no surprise that the top contributor was Red Hat (12%), followed by Intel (8%), IBM and Novell (6% each). Despite competing with one another, these companies also recognise the importance of co-operation. Naturally, each company develops areas that are beneficial to its own needs, but we all gain from that.
